Predicting Wine Quality with Deep Learning

Deep Learning Problem

Train a model on the data to predict wine quality

Step 1: Problem Statement

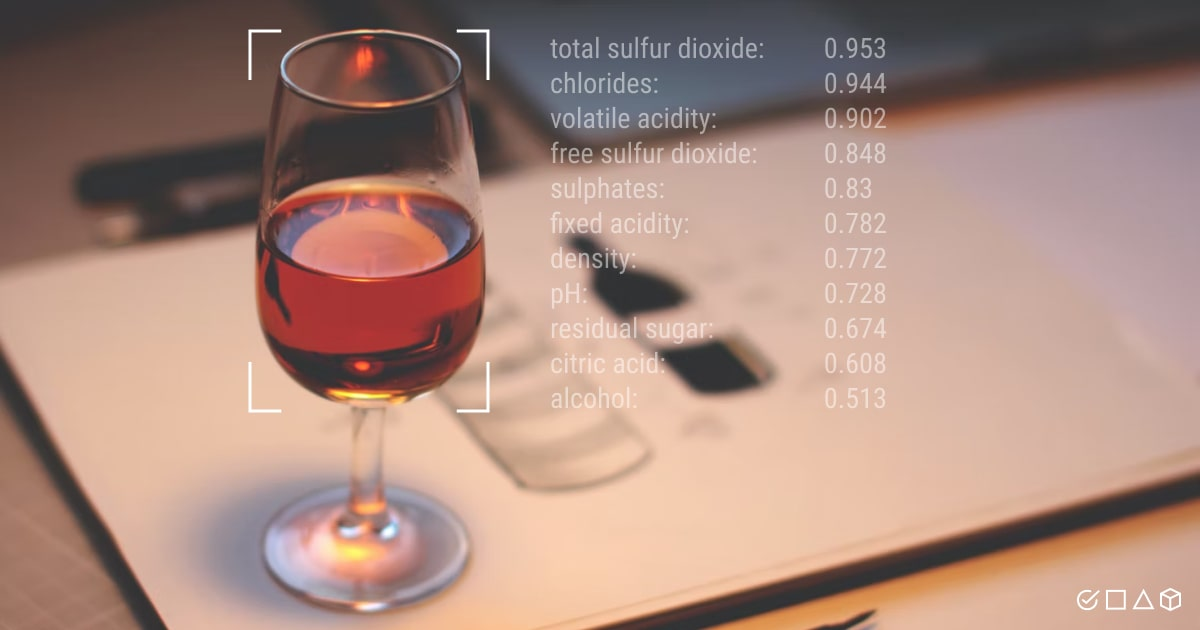

We’ll use the Wine Quality Dataset (CSV) to predict wine quality (regression) or classify wine as good/bad (binary classification).

Step 2: Set Up Google Colab

Go to Google Colab.

Click File → New Notebook.

Rename the notebook (e.g., MLP_Wine_Quality_Tutorial, or any name you prefer).

Step 3: Import Libraries

Here is what each library used in this tutorial does:

1. Data Handling Libraries

These libraries are useful for managing, analyzing, and manipulating datasets.

numpy (np):

NumPy is used for numerical computations in Python.

It provides powerful array structures and mathematical functions.

Example: It helps in working with arrays and matrices efficiently.
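As a small illustration of NumPy's array handling, the sketch below computes per-column means and centers the data. The numbers are made up for the example and are not taken from the wine dataset.

```python
import numpy as np

# Two made-up feature columns for three wines (illustrative values only).
features = np.array([[7.4, 0.70],
                     [7.8, 0.88],
                     [6.3, 0.30]])

col_means = features.mean(axis=0)   # mean of each column
centered = features - col_means     # broadcasting subtracts the mean per column

print(features.shape)
print(col_means)
print(centered)
```

The subtraction works without a loop because NumPy broadcasts the 2-element mean vector across all three rows.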

pandas (pd):

Pandas is a data manipulation library that provides DataFrame and Series structures.

It allows for easy reading, writing, and manipulation of structured data.

Example: Reading CSV files, filtering data, handling missing values, etc.
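The operations listed above might look like this in practice. This is a minimal sketch that reads a tiny in-memory CSV (a stand-in for a real file, with made-up values), fills one missing value, and filters rows.

```python
import io
import pandas as pd

# A tiny in-memory CSV standing in for a real file (values are made up).
csv_text = """alcohol,pH,quality
9.4,3.51,5
,3.20,7
10.5,3.16,6
"""
df = pd.read_csv(io.StringIO(csv_text))

# Handle the missing alcohol value by filling it with the column mean.
df["alcohol"] = df["alcohol"].fillna(df["alcohol"].mean())

# Filter: keep only rows with a quality score of 6 or higher.
high_quality = df[df["quality"] >= 6]
print(high_quality)
```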

2. Data Visualization Libraries

These libraries help in understanding data distribution and trends.

matplotlib.pyplot (plt):

It is used for creating static, animated, and interactive visualizations.

Example: Drawing line plots, bar charts, scatter plots, etc.

seaborn (sns):

Built on Matplotlib, Seaborn provides enhanced visualization capabilities.

It is useful for drawing more attractive and informative statistical graphs.

Example: Heatmaps, box plots, violin plots, etc.
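To show how the two plotting libraries fit together, here is a hedged sketch that draws a Matplotlib histogram next to a Seaborn correlation heatmap. The DataFrame `demo` is synthetic random data invented for this example; with the real dataset you would pass your own DataFrame instead.

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns

# Synthetic data standing in for the wine table (not real measurements).
rng = np.random.default_rng(0)
demo = pd.DataFrame({
    "alcohol": rng.normal(10.4, 1.0, 200),
    "pH": rng.normal(3.3, 0.15, 200),
    "quality": rng.integers(3, 9, 200),
})

fig, (ax_hist, ax_heat) = plt.subplots(1, 2, figsize=(10, 4))

# Matplotlib: histogram showing the distribution of one column.
ax_hist.hist(demo["alcohol"], bins=20)
ax_hist.set_xlabel("alcohol")
ax_hist.set_ylabel("count")

# Seaborn: heatmap of pairwise correlations between columns.
sns.heatmap(demo.corr(), annot=True, cmap="coolwarm", ax=ax_heat)

fig.tight_layout()
plt.show()
```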

3. Machine Learning Model Preparation

These scikit-learn utilities help in preprocessing data and splitting it for training and testing.

train_test_split (from sklearn.model_selection):

Splits data into training and testing sets.

Example: 80% training data and 20% testing data.
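An 80/20 split could be written as follows. The arrays here are toy data, and `random_state=42` and `stratify=y` are illustrative choices (fixed seed, balanced classes in both splits), not settings confirmed by the tutorial.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Toy data: 50 samples with 2 features each, and alternating 0/1 labels.
X = np.arange(100).reshape(50, 2)
y = np.array([0, 1] * 25)

# test_size=0.2 keeps 20% of the rows for testing; stratify=y preserves
# the class ratio in both the training and the test set.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

print(X_train.shape, X_test.shape)
```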

StandardScaler (from sklearn.preprocessing):

Standardizes features by removing the mean and scaling to unit variance.

Useful for models that are sensitive to feature scale, such as neural networks.
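A minimal sketch of standardization on toy numbers is shown below. Fitting the scaler on the training data only and reusing it on the test data is a common practice assumed here, so that test statistics do not leak into training.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Toy data: the second feature is on a much larger scale than the first.
X_train = np.array([[1.0, 100.0],
                    [2.0, 200.0],
                    [3.0, 300.0]])
X_test = np.array([[2.0, 400.0]])

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learn mean/std from training data
X_test_scaled = scaler.transform(X_test)        # reuse the training mean/std

print(X_train_scaled.mean(axis=0))  # approximately 0 for each column
print(X_train_scaled.std(axis=0))   # approximately 1 for each column
```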

4. Performance Metrics

These functions help evaluate the model's performance.

accuracy_score (from sklearn.metrics):

Measures classification accuracy (used for classification problems).

Example: accuracy_score(y_true, y_pred), where y_true is actual labels and y_pred is predicted labels.
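For instance, with made-up labels where four of five predictions match, the call looks like this:

```python
from sklearn.metrics import accuracy_score

# Made-up labels: 1 = good wine, 0 = bad wine.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0]   # one mistake, at index 2

acc = accuracy_score(y_true, y_pred)
print(acc)  # 4 correct out of 5 -> 0.8
```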

mean_squared_error (from sklearn.metrics):

Measures the average squared difference between predicted and actual values (used for regression problems).

Example: Lower MSE indicates better model performance.
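The sketch below computes MSE on made-up quality scores and checks it against the formula written out by hand (the mean of the squared errors).

```python
import numpy as np
from sklearn.metrics import mean_squared_error

# Made-up actual and predicted quality scores.
y_true = np.array([5.0, 6.0, 7.0])
y_pred = np.array([5.5, 6.0, 6.0])

mse = mean_squared_error(y_true, y_pred)

# The same quantity by hand: average of the squared differences.
manual = np.mean((y_true - y_pred) ** 2)

print(mse, manual)
```

Because the errors are squared, the single prediction that is off by 1.0 contributes four times as much as the one that is off by 0.5.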

5. Deep Learning Libraries (TensorFlow & Keras)

These libraries are used to build and train deep learning models.

tensorflow (tf):

An open-source library for machine learning and deep learning.

Provides tools for model training and optimization.

keras (from tensorflow):

A high-level neural network API running on top of TensorFlow.

Simplifies the process of building and training deep learning models.
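To give a feel for the Keras API, here is a minimal sketch of a small multilayer perceptron for binary classification. The layer sizes, the optimizer, and the constant `N_FEATURES = 11` (the number of input columns once the quality column is removed) are assumptions made for this illustration, not the architecture used later in the tutorial.

```python
from tensorflow import keras
from tensorflow.keras import layers

N_FEATURES = 11  # assumed number of input columns (illustrative)

model = keras.Sequential([
    keras.Input(shape=(N_FEATURES,)),
    layers.Dense(16, activation="relu"),     # one small hidden layer
    layers.Dense(1, activation="sigmoid"),   # outputs a probability of "good"
])

model.compile(
    optimizer="adam",
    loss="binary_crossentropy",
    metrics=["accuracy"],
)
model.summary()
```

For a regression setup, one option would be to drop the sigmoid activation on the last layer and use a mean squared error loss instead.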

Step 4: Load Data

We’ll use the Wine Quality dataset from Kaggle.

Download the dataset from here (click "Download").

In Colab, click the folder icon (📁) on the left → "Upload" the winequality-red.csv file.
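Once the file is uploaded, loading it might look like the sketch below. It assumes the file sits in the Colab working directory and is comma-separated; some copies of this dataset use semicolons, in which case `sep=";"` would be needed. The helper name `load_wine` is hypothetical.

```python
from pathlib import Path
import pandas as pd

CSV_PATH = Path("winequality-red.csv")  # the file uploaded to the Colab session


def load_wine(path, sep=","):
    """Read the wine CSV into a DataFrame (hypothetical helper)."""
    return pd.read_csv(path, sep=sep)


if CSV_PATH.exists():
    df = load_wine(CSV_PATH)
    print(df.shape)
    print(df.head())
else:
    print(f"{CSV_PATH} not found; upload it to the session first")
```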

Explore Data

Visualize Data

Step 5: Preprocess Data

(1) Define Features (X) and Target (y)

Let’s do binary classification:

Good wine: quality >= 7

Bad wine: quality < 7
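The threshold above can be turned into features and a binary target as sketched here. The small DataFrame is a made-up stand-in for the loaded wine table, and the column name `quality` is assumed to match the dataset's target column.

```python
import pandas as pd

# Stand-in rows; in the notebook, df would be the loaded wine table.
df = pd.DataFrame({
    "alcohol": [9.4, 12.8, 10.5, 11.2],
    "pH": [3.51, 3.20, 3.16, 3.30],
    "quality": [5, 7, 6, 8],
})

X = df.drop(columns="quality")          # features: every column except the target
y = (df["quality"] >= 7).astype(int)    # 1 = good wine, 0 = bad wine

print(y.tolist())  # [0, 1, 0, 1]
```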
